Ever wonder why you choose one food over another? Sure, you might have the reasons you tell yourself for why you picked, say, cage vs. cage free eggs. But are these the real reasons?

I've been interested in these sorts of questions for a while and, along with several colleagues, have turned to a new tool - functional magnetic resonance imaging (fMRI) - to peek inside people's brains as they're choosing between different foods. You might be able to fool yourself (or survey administrators) about why you do something, but your brain activity doesn't lie (at least we don't think it does).

In a new study that was just released by the Journal of Economic Behavior and Organization, my co-authors and I sought to explore some issues related to food choice. The main questions we wanted to answer were: 1) does one of the core theories for how consumers choose between goods of different qualities (think cage vs. cage free eggs) have any support in neural activity, and 2) after only seeing how your brain responds to images of eggs with different labels, can we actually predict which eggs you will ultimately choose in a subsequent choice task?

Our study suggests the answers to these two questions are "maybe" and "yes".

First, we asked people to just look at eggs with different labels while they were lying in the scanner. The labels were either a high price, a low price, a "closed" production method (caged or confined), or an "open" production method (cage free or free range), as the image below suggests. As participants were looking at different labels, we observed whether blood flow increased or decreased to different parts of the brain when seeing, say, higher prices vs. lower prices.

We focused on a specific area of the brain, the ventromedial prefrontal cortex (vmPFC), which previous research had identified as a brain region associated with forming value.

What did this stage of the research study find? Not much. There were no significant differences in brain activation in the vmPFC when looking at high vs. low prices or when looking at open vs. closed production methods. However, there was a lot of variability across people, and we conjectured that this variability might predict which eggs people would choose in a subsequent task.

So, in the second stage of the study, we gave people a non-hypothetical choice like the following, which pitted a more expensive carton of eggs produced in a cage free system against a lower priced carton of eggs from a cage system. People answered 28 such questions where we varied the prices, the words (e.g., free range instead of cage free), and the order of the options. One of the choices was randomly selected as binding and people had to buy the option they chose in the binding task.
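The random-binding step can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the function name `pick_binding_choice` and the option labels are hypothetical and not taken from the study; only the count of 28 questions comes from the description above.

```python
import random

def pick_binding_choice(choices, rng=None):
    """Pick one answered question at random to be the binding purchase.

    `choices` is a list of (question_number, chosen_option) pairs.
    """
    rng = rng or random.Random()
    return rng.choice(choices)

# Hypothetical answers to the 28 questions (labels are illustrative only).
answers = [(q, "cage free" if q % 2 else "caged") for q in range(1, 29)]

# Seeded generator so the illustration is reproducible.
binding = pick_binding_choice(answers, random.Random(0))
print(f"Question {binding[0]} is binding: participant buys the {binding[1]} eggs")
```

Because any one of the questions could turn out to be the one that counts, participants have a reason to answer every question as if it involved real money.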

Our main question was this: can the brain activation we observed in the first step, where people were just looking at eggs with different labels, predict which eggs they would choose in the second step?

The answer is "yes". In particular, the difference in vmPFC activation when looking at eggs with an "open" label vs. a "closed" label is significantly related to the propensity to choose the higher-priced open eggs over the lower-priced closed eggs (it should be noted that we did not find any predictive power from the difference in vmPFC activation when looking at high vs. low priced egg labels).

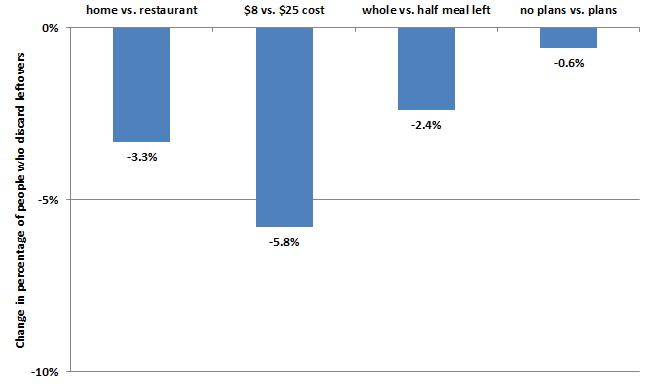

Based on a statistical model, we can even translate these differences in brain activation into willingness-to-pay (WTP) premiums.

Here's what we say in the text:

“Moving from the mean value of approximately zero for vmPFCmethodi to twice the standard deviation (0.2) in the sample while holding the price effect at its mean value (also approximately zero), increases the willingness-to-pay premium for cage-free eggs from $2.02 to $3.67. Likewise, moving two standard deviations in the other direction (-0.2) results in a discount of about 38 cents per carton. The variation in activations across our participants fluctuates more than 80 percent, a sizable effect that could be missed by simply looking at vmPFCmethod value alone and misinterpreting its zero mean as the lack of an effect.”
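
The arithmetic behind the quoted figures can be checked with a short Python sketch. It uses only the two WTP numbers and the 0.2 shift from the passage above; the variable names and the per-unit slope are illustrative shorthand, not quantities reported in the paper.

```python
# Figures quoted in the passage above.
wtp_at_mean = 2.02      # WTP premium ($/carton) at the mean vmPFC method activation
wtp_at_plus_2sd = 3.67  # WTP premium ($/carton) after moving up by 0.2
shift = 0.2             # size of the two-standard-deviation move

# Dollar and percentage change in the premium for that move.
change = wtp_at_plus_2sd - wtp_at_mean
pct_change = 100 * change / wtp_at_mean

# Illustrative only: dollars of WTP per unit of activation implied by these two points.
implied_slope = change / shift

print(f"Change in WTP: ${change:.2f} per carton")
print(f"Relative change: {pct_change:.1f}%")
print(f"Implied change per unit of activation: ${implied_slope:.2f}")
```

The relative change works out to roughly 82 percent of the baseline premium, which is consistent with the "more than 80 percent" figure in the quote.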