I recently completed a survey of over 1,200 U.S. consumers to find out exactly what they think “natural” means when evaluating different foods. The full report is available here, and topline results for all questions asked are here (the survey also covered consumers’ perceptions of “healthy” claims, which I’ll blog about later).

Here is the motivation for the study:

“While food companies are allowed to use a ‘natural’ label or claim, the Food and Drug Administration (FDA) has refrained from defining the term. One consequence has been a large number of lawsuits in recent years in which plaintiffs claim to suffer harm from being misled about food product contents or ingredients when accompanied with a natural label (Creswell, 2018). In 2015, the FDA requested public comment on the use of the term natural in food labeling, signaling a potential move to define the term. Such events suggest the need for more information about how food consumers perceive and define the term natural.”

One of the initial queries was an open-ended question which asked, “What does it mean to you for a food to be called ‘natural’?” Here is a word cloud constructed from the responses.

Words like artificial, additive, chemical, and organic were most commonly mentioned. More than 10% of respondents specifically mentioned the word artificial. A non-trivial share of respondents suggested the word was meaningless, marketing hype, or that they did not know what the word meant.

Respondents were also given a list of possible words/definitions and asked which best fit their definition of natural. “No preservatives” and “no antibiotics/hormones” topped the list.

Although respondents associated preservatives with a lack of naturalness, their responses about specific preservatives were more nuanced. Preservation by canning and with sugar/salt/vinegar was perceived by more people as natural than not natural, whereas preservation with benzoates/nitrites/sulphites was not.

To home in on which processes/foods people consider natural vs. not natural, respondents were shown the following figure and asked, “Which of the following foods or processes do you consider to be natural? (click up to 5 items on the image that you believe are natural).” The question was then repeated with “natural” replaced by “NOT natural.”

You can find some colorful heat-maps of the resulting clicks in the full report. Here, I’ll just note that about half of respondents (47.1%) clicked on the image of the raw commodities as being natural. The next most commonly clicked areas, each chosen by between 20% and 30% of respondents, were grits/oatmeal, wash/clean, and wash/grind/slice. Even after seeing the processes involved, 19.8% clicked vegetable oil as natural and 13.3% clicked flour as natural. By contrast, “Bleach” was most frequently clicked (by 33.8% of respondents) as not natural, followed by “Crystalize,” and then alcohol, syrup, and sugar.

A curious result is that, in many cases, final foods are considered more natural than the processes used to make them. For example, more people clicked alcohol as natural than clicked fermentation as natural. Vegetable oil was perceived as more natural than pressing or bleaching, both of which are used to create this final product. Similarly, sugar is perceived as more natural than crystallization, even though the latter is necessary to produce the former. These findings suggest that a final product can be considered natural even if a process used to make it is not.

I also asked questions about crop production processes and perceptions of naturalness.

About 80% more respondents said organically grown crops were natural than said such crops were not natural. Crops grown indoors and crops grown hydroponically were, on net, seen as more natural than not. All other crop production practices were rated as not natural by more respondents than rated them as natural. Thus, the results suggest consumers are skeptical of the naturalness of most modern crop production practices. Curiously, this is true even for the use of hybrid seeds. Crops produced with biotechnology were much more likely to be considered not natural than natural. Consumers perceived organic as natural, but not the pesticides used in organic agriculture or the methods (i.e., mutagenesis) used to create many organic seeds. Again, these findings suggest that a final product can be considered natural even if a process used to make it is not; in this case, the finding likely results from a lack of knowledge about organic production practices.

On the topic of misperceptions, the existence of a federal definition of natural does not mean consumers know or understand it. The USDA currently defines “natural” for meat products, primarily as “minimally processed.” However, only about a quarter of respondents in this survey (26.6%) correctly picked this definition when asked how the USDA defines the term. More than 30% of respondents incorrectly believed the USDA definition of natural implies “no hormones,” and 23.8% thought a natural label implies “no antibiotics.” These data suggest more than half of respondents are misled by the USDA definition of natural, a result supported by other recent academic research.

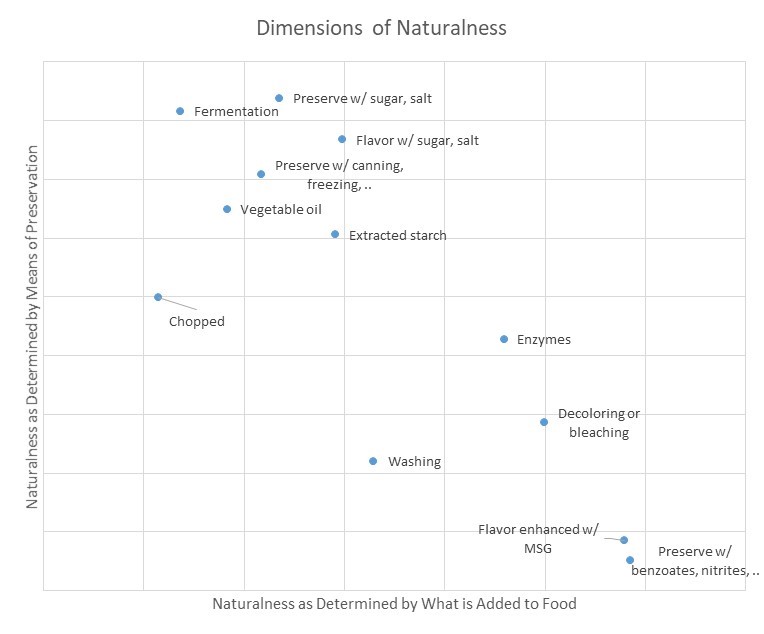

There is a lot more in the detailed report, including more information on question wording and methods of analysis. For example, an analysis of correlations between responses (via factor analysis) suggests “natural” is not a single monolithic construct in consumers’ minds but is instead multidimensional. A food or process can be considered natural on one dimension but not another, as shown in the following figure.

Thanks to the Corn Refiners Association, which funded this survey. They gave me free rein to ask the questions and analyze the data as I wanted. You can see their interpretation of the results and their policy recommendations here.