Yes, the title of the paper says “association” not “causation.” But, of course, that didn’t prevent the authors - in the abstract - from concluding, “promoting organic food consumption in the general population could be a promising preventive strategy against cancer” or CNN from running a headline that says, “You can cut your cancer risk by eating organic.”

So, first, how might this be only correlation and not causation? People who consume organic foods are likely to differ from people who do not in all sorts of ways that might also affect health outcomes. As the authors clearly show in their own study, people who say they eat a lot of organic food have higher incomes, are better educated, are less likely to smoke and drink, eat much less meat, and have overall healthier diets than people who say they never eat organic. The authors try to “control” for these factors in a statistical analysis, but there are two problems with this. First, the devil is in the details: the way these confounding factors are measured, and the way they interact, could have significant effects on the results. Second, and more fundamentally, some important “controls” are missing altogether: things like overall health consciousness, risk aversion, social conformity, and more. These unobserved factors are likely to be highly correlated with both organic food consumption and cancer risk, and thus the estimated effect of organic is likely biased. There are many examples of this sort of endogeneity bias, and failing to think carefully about how to handle it can lead to effects that are under- or over-estimated, or that even have the wrong sign.

To illustrate, suppose an unmeasured variable like health consciousness is driving both organic purchases and cancer risk. A highly health conscious person is going to undertake all sorts of activities that might lower cancer risks - seeing the doctor regularly, taking vitamins, being careful about their diet, reading new dietary studies, exercising in certain ways, etc. And, such a person might also eat more organic food, thus the correlation. The point is that even if such a highly health conscious person weren’t eating organic, they’d still have lower cancer risk. It isn’t the organic causing the lower cancer risk. Or stated differently, if we took a highly health UNconscious person and forced them to eat a lot of organic, would we expect their cancer risk to fall? If not, this is correlation and not causation.
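The thought experiment above can be sketched as a small simulation. This is only an illustration under made-up assumptions, not a model of the study's data: the `simulate` function, the 2% baseline risk, and the strength of the link between the hypothetical `health` variable and both organic choice and cancer are all invented here. Organic food has zero causal effect by construction, yet people who choose organic still show lower cancer rates, while people randomly assigned to organic (the "forced" case) do not.

```python
import math
import random


def simulate(n=200_000, seed=0):
    """Cancer rates by organic status when organic has NO causal effect.

    Every number in here is a made-up assumption for illustration.
    """
    rng = random.Random(seed)
    # tallies[setting][eats_organic] = [people, cancer cases]
    tallies = {
        "observed": {True: [0, 0], False: [0, 0]},
        "randomized": {True: [0, 0], False: [0, 0]},
    }
    for _ in range(n):
        # Unmeasured health consciousness (hypothetical standard-normal trait).
        health = rng.gauss(0, 1)
        # Cancer risk depends on health consciousness only, never on organic.
        p_cancer = 0.02 * math.exp(-0.5 * health)
        cancer = rng.random() < p_cancer
        # Observational world: health-conscious people tend to choose organic.
        chooses_organic = health + rng.gauss(0, 1) > 0
        # Experimental world: a coin flip decides who eats organic.
        assigned_organic = rng.random() < 0.5
        for setting, organic in (
            ("observed", chooses_organic),
            ("randomized", assigned_organic),
        ):
            tallies[setting][organic][0] += 1
            tallies[setting][organic][1] += int(cancer)
    return {
        setting: {
            organic: cases / people
            for organic, (people, cases) in groups.items()
        }
        for setting, groups in tallies.items()
    }


rates = simulate()
for setting, by_group in rates.items():
    print(setting, {organic: round(rate, 4) for organic, rate in by_group.items()})
```

In the "observed" rows the organic group looks protected even though nothing in the code lets organic food affect cancer; in the "randomized" rows the gap should shrink to sampling noise.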

Ideally, we’d like to conduct a randomized controlled trial (RCT) (randomly feed one group a lot of organic and another group none and compare outcomes), but these types of studies can be very expensive and time-consuming. Fortunately, economists and others have come up with creative ways to address the unobserved variable and endogeneity issues that get us closer to the RCT ideal, but I see no effort on the part of these authors to take these issues seriously in their analysis.

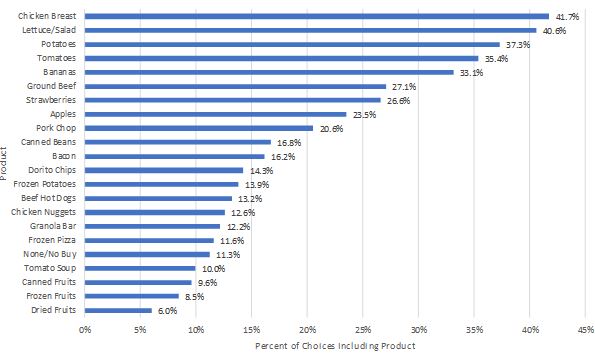

Then, there are all sorts of worrying details in the study itself. Organic food consumption is a self-reported variable measured in a very ad-hoc way. People were asked if they consumed organic most of the time (two points), occasionally (one point), or never (no points), and these points were summed across 16 different food categories ranging from fruits to meats to vegetable oils. Curiously, when the authors limit their organic food variable to only plant-based sources (presumably because this is where pesticide risks are most acute), the effects for most cancers diminish. It is also curious that there wasn’t always a “dose response” relationship between organic consumption scores and cancer risk. Also, when the authors limit their analysis to particular sub-groups (like men), the relationship between organic consumption and cancer disappears. Tamar Haspel, a food and agricultural writer for the Washington Post, delves into some of these issues and more in a Tweet-storm.
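To make the scoring scheme described above concrete, here is a minimal sketch of how such a score could be computed. The `organic_score` function and the answer labels are assumptions for illustration, and the example lists only the three categories named above rather than the study's full set of 16. With 16 categories at up to two points each, the score would range from 0 to 32.

```python
# Points per answer, following the scheme described in the text.
POINTS = {"most of the time": 2, "occasionally": 1, "never": 0}


def organic_score(responses):
    """Sum the points over every food category a person answered for."""
    return sum(POINTS[answer] for answer in responses.values())


# Hypothetical respondent; only three of the 16 categories are shown.
example = {
    "fruits": "most of the time",
    "meats": "never",
    "vegetable oils": "occasionally",
}
print(organic_score(example))  # 3
```

Note how coarse this is: two people with the same score can have very different diets, and the score says nothing about how much of each food was actually eaten.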

Finally, even if the estimated effects are “true”, how big and consequential are they? The authors studied 68,946 people, 1,340 of whom were diagnosed with cancer at some point during the approximately 6-year study. So, the baseline chance of getting any type of cancer was (1,340/68,946)*100 = 1.9%, or roughly 2 people out of 100. Now, let’s look at the case where the effects seem to be the largest and most consistent across the various specifications, non-Hodgkin lymphomas (NHL). There were 47 cases of NHL, meaning there was a (47/68,946)*100 = 0.068% overall chance of getting NHL in this population over this time period. 15 and 14 people, respectively, in the first (lowest) and second quartiles of organic food scores had NHL, but 16 people in the third quartile of organic food consumption had NHL. When we get to the highest quartile of stated organic food consumption, the number of people with NHL dropped to only 2. After making various statistical adjustments, the authors calculate a “hazard ratio” of 0.14 for people in the highest vs. lowest quartiles of organic food consumption, meaning there was a whopping 86% reduction in risk. But, what does that mean relative to the baseline? It means going from a risk of 0.068% to a risk of 0.068*0.14 ≈ 0.01%, or from about 7 in 10,000 to 1 in 10,000. To put these figures in perspective, the overall likelihood of someone in the population dying from a car accident next year is about 1.25 in 10,000, and about 97 in 10,000 over the course of a lifetime. The one-year and lifetime risks of dying from a fall on stairs and steps are 0.07 in 10,000 and 5.7 in 10,000.
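The back-of-the-envelope arithmetic above is easy to check. The sketch below uses only the counts and the hazard ratio quoted in the text; the helper names are my own, and applying the hazard ratio to the overall NHL rate (rather than to the lowest quartile's rate) is the same simplification made above.

```python
PARTICIPANTS = 68_946
CANCER_CASES = 1_340
NHL_CASES = 47
NHL_HAZARD_RATIO = 0.14  # highest vs. lowest quartile, as quoted in the text


def percent(cases, population):
    """Share of the population affected, in percent."""
    return 100 * cases / population


def per_10k(risk_percent):
    """Convert a risk in percent to cases per 10,000 people."""
    return risk_percent * 100


baseline_any = percent(CANCER_CASES, PARTICIPANTS)
baseline_nhl = percent(NHL_CASES, PARTICIPANTS)
reduced_nhl = baseline_nhl * NHL_HAZARD_RATIO

print(f"Any cancer:       {baseline_any:.1f}%")
print(f"NHL, baseline:    {baseline_nhl:.3f}% ({per_10k(baseline_nhl):.1f} per 10,000)")
print(f"NHL, after HR:    {reduced_nhl:.3f}% ({per_10k(reduced_nhl):.1f} per 10,000)")
print(f"Absolute change:  {per_10k(baseline_nhl - reduced_nhl):.1f} per 10,000")
```

The relative reduction is 86%, but the absolute change works out to roughly 6 fewer cases per 10,000 people over the study period, which is the number that matters for judging how consequential the effect is.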

In sum, I'm not arguing that eating more organic food might not be causally related to reduced cancer risk, especially given the plausible causal mechanisms. Rather, I’m arguing that this particular study doesn’t go very far in helping us answer that fundamental question. And, if studies that take causal identification seriously ultimately produce better estimates that reverse these findings, we will have undermined consumer trust by promoting these types of studies (just ask people whether they think eggs, coffee, chocolate, or blueberries increase or reduce the odds of cancer or heart disease).