One of the most basic measures of well-being is whether people have enough food to eat. Whether the U.S. does well in this regard seems to depend on whom you ask. Many people in the so-called food movement seem to think we're doing OK on this front and that food is actually too cheap. Other groups, like Feeding America, think hunger is a serious concern and are doing what they can to reduce it.

The USDA Economic Research Service produces the most widely used measure of hunger (or, as they call it, "food security"). According to their data:

“An estimated 12.7 percent of American households were food insecure at least some time during the year in 2015, meaning they lacked access to enough food for an active, healthy life for all household members. That is down from 14.0 percent in 2014.”

This figure shot up during the Great Recession, reaching a high of 14.9% of households in 2011. It has since fallen somewhat, as the quote above indicates, but it remains higher than before 2008, when it was regularly in the 10 to 11% range.

I was curious how the sample of people I study every month in my Food Demand Survey (FooDS) matches up with these official government statistics. In the most recent edition of FooDS, from April 2017, we added the short 6-item measure of food insecurity, which is based on work by the USDA. For example, one of the questions is: "'The food that I bought just didn’t last, and I didn’t have money to get more.' Was that often, sometimes, or never true for you/your household in the last 12 months?"

Data from FooDS reveal a strikingly high level of food insecurity, much higher than what the USDA reports. According to the USDA's scoring criteria for this measure, a whopping 46.7% of respondents were classified as having low or very low food security (22.9% of the sample had low food security and 23.8% had very low food security).
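For readers curious how six answers become a category, here is a minimal Python sketch of short-form scoring, assuming the USDA's published thresholds: each affirmative answer adds one point, a raw score of 0 to 1 counts as food secure (high or marginal), 2 to 4 as low food security, and 5 to 6 as very low food security. The item labels follow the USDA module, but the simplified answer strings and the function names `raw_score` and `classify` are hypothetical, not the actual FooDS coding.

```python
from typing import Mapping

# Answers counted as "affirmative" for each short-form item.
# Simplified, lowercase answer labels are an assumption for illustration.
AFFIRMATIVE = {
    "HH3": {"often", "sometimes"},   # food bought didn't last
    "HH4": {"often", "sometimes"},   # couldn't afford balanced meals
    "AD1": {"yes"},                  # cut the size of meals or skipped meals
    "AD1a": {"almost every month", "some months but not every month"},  # how often AD1 happened
    "AD2": {"yes"},                  # ate less than you felt you should
    "AD3": {"yes"},                  # hungry but didn't eat
}


def raw_score(answers: Mapping[str, str]) -> int:
    """Count affirmative answers; items not asked or left blank count as 0."""
    return sum(
        1
        for item, yes_answers in AFFIRMATIVE.items()
        if answers.get(item, "").strip().lower() in yes_answers
    )


def classify(score: int) -> str:
    """Map a 0-6 raw score to a food security category."""
    if score <= 1:
        return "food secure"  # high or marginal food security
    if score <= 4:
        return "low food security"
    return "very low food security"


example = {"HH3": "sometimes", "HH4": "often", "AD1": "yes",
           "AD1a": "only 1 or 2 months", "AD2": "no", "AD3": "no"}
print(classify(raw_score(example)))  # prints "low food security" (score of 3)
```

In a weighted survey like FooDS, the reported percentages would then be weighted shares of each category rather than raw counts.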

My first thought was that we must have made a mistake in how we asked the questions or in how we analyzed the data. We ruled out both possibilities. My second thought was that maybe my survey sample is really different from the U.S. population. After all, who is willing to sign up to take online surveys? Maybe people who really need the money and are thus more likely to be food insecure. But this couldn't be the complete answer: I use weights to force my sample to match the U.S. population in terms of age, education, gender, and region of residence, and the average income of my sample isn't much different from the average income of the country as a whole. A third possibility is that I used a 6-item measure of food insecurity rather than the full 18 items used by the USDA, but previous research has found strong agreement between the two.
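The post doesn't say exactly how the FooDS weights are computed. One common way to make a sample match population margins on several variables at once is raking (iterative proportional fitting), and the Python sketch below illustrates that technique under the assumption that something similar is used. The function name `rake`, the variables, and the target shares in the usage lines are all hypothetical.

```python
from collections import defaultdict


def rake(rows, targets, max_iter=100, tol=1e-9):
    """Raking (iterative proportional fitting) of survey weights.

    rows:    one dict per respondent, e.g. {"gender": "f", "region": "south"}
    targets: {variable: {category: population share}}; each variable's shares
             must be positive and sum to 1, and cover every category in rows.
    Returns one weight per respondent; weights sum to len(rows).
    """
    n = len(rows)
    weights = [1.0] * n
    for _ in range(max_iter):
        max_change = 0.0
        for var, shares in targets.items():
            # Current weighted total of each category of this variable.
            totals = defaultdict(float)
            for w, row in zip(weights, rows):
                totals[row[var]] += w
            # Rescale so this variable's weighted shares hit the targets.
            for i, row in enumerate(rows):
                factor = shares[row[var]] * n / totals[row[var]]
                max_change = max(max_change, abs(factor - 1.0))
                weights[i] *= factor
        if max_change < tol:  # every margin already matched
            break
    return weights


# Hypothetical four-person sample raked to 50/50 gender and 60/40 region.
sample = [
    {"gender": "f", "region": "south"},
    {"gender": "f", "region": "north"},
    {"gender": "m", "region": "south"},
    {"gender": "m", "region": "south"},
]
targets = {"gender": {"f": 0.5, "m": 0.5}, "region": {"south": 0.6, "north": 0.4}}
w = rake(sample, targets)
```

Here the weights settle near 0.4, 1.6, 1.0, and 1.0, which match both margins at once. Note that raking only matches the chosen margins; it cannot correct for selection on traits (like financial need) that aren't among the weighting variables.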

When I mentioned this quandary to my friend Bailey Norwood, he knew immediately what was causing part of the discrepancy, and I think it could have a big impact on how we fundamentally view the food security measures reported by the USDA.

In short, the USDA assumes that if you make enough money you can't be food insecure [*Addendum: this original sentence, as stated, was too strong. As the quote below suggests, you can't be classified as food insecure if you're high income AND you give no indication of food-access problems on two preliminary screening questions about food insufficiency. Some researchers in this area emailed me to note that about 25% of food-insecure households have incomes at least 300% of the poverty line]. In their latest report, they indicate in footnote 5:

“To reduce the burden on higher income respondents, households with incomes above 185 percent of the Federal poverty line that give no indication of food-access problems on either of two preliminary screening questions are deemed to be food secure and are not asked the questions in the food security assessment series.”

What if I take my FooDS data and simply assume that anyone whose income puts them above 185% of the poverty line (based on these criteria) is food secure, regardless of the answers they gave on the survey? (Note: my calculations are crude because I only measure household income in wide $20,000 ranges, and I simply assign each person the midpoint of the income range they selected.)
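The crude income correction described above can be sketched in Python as follows. Several pieces are assumptions rather than the exact FooDS procedure: the bracket boundaries, the hypothetical function names, and the poverty line, which is approximated here with the 2017 HHS poverty guideline for the 48 contiguous states ($12,060 for one person plus $4,180 per additional person). Like the correction in the text, it ignores the USDA's two preliminary screening questions.

```python
# Assumed poverty line: 2017 HHS guideline, 48 contiguous states (illustrative).
BASE_POVERTY_LINE = 12_060   # one-person household
PER_EXTRA_PERSON = 4_180     # each additional household member
SCREEN_RATIO = 1.85          # USDA screen: above 185% of the poverty line


def bracket_midpoint(low: float, high: float) -> float:
    """Assign a respondent the midpoint of the income bracket they chose."""
    return (low + high) / 2


def poverty_line(household_size: int) -> int:
    return BASE_POVERTY_LINE + PER_EXTRA_PERSON * (household_size - 1)


def apply_income_screen(category: str, low: float, high: float,
                        household_size: int) -> str:
    """Reclassify as food secure if estimated income exceeds 185% of poverty.

    Crude version: unlike the USDA, it does not also require "no" answers
    on the two preliminary screening questions.
    """
    income = bracket_midpoint(low, high)
    if income > SCREEN_RATIO * poverty_line(household_size):
        return "food secure"
    return category


def weighted_share_insecure(categories, weights) -> float:
    """Weighted share of respondents with low or very low food security."""
    insecure = sum(w for c, w in zip(categories, weights) if c != "food secure")
    return insecure / sum(weights)


# A 4-person household in a $40,000-$60,000 bracket: midpoint $50,000
# exceeds 1.85 * $24,600 = $45,510, so it is overridden to food secure.
print(apply_income_screen("low food security", 40_000, 60_000, 4))  # food secure
```

Because everyone in a bracket gets the same midpoint, households near the 185% line can land on the wrong side of it, which is one reason the corrected figure should be read as approximate.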

When I do this, I find that now "only" 22% are classified as having low or very low food security (9% of the sample had low food security and 13% had very low food security). That's still a lot higher than what the USDA reports, so maybe my internet survey still has some sample selection issues. However, it's roughly HALF the original measure.

What does this mean? Many relatively high-income people would be classified as food insecure if the USDA simply asked them the same questions as everyone else. In my sample, a lot of relatively high-income people say "yes" to questions like "In the last 12 months, did you ever eat less than you felt you should because there wasn't enough money for food?"

None of this is to say that income isn't a determinant of food security, but it shouldn't be the only signal. Someone with a decent income who is in a lot of debt or has a large household could still be going hungry.

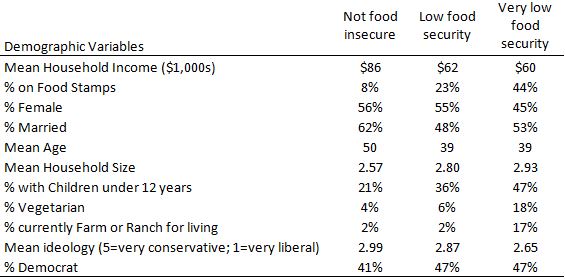

In any event, here are some of the demographic characteristics of the people who, according to my sample (and without making the above discussed income correction), classify as being food secure, low food security, or very low food security.

As the table above indicates, income matters: the average income of food-secure households is $86,000/year. However, households with low food security still average $60,000/year, which is far above 185% of the poverty line for most households.
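As a rough check on that comparison, assume the poverty line is the 2017 HHS poverty guideline for the 48 contiguous states ($12,060 for one person plus $4,180 for each additional person); the USDA's own screen may rely on a somewhat different poverty measure. With $n$ as household size, the 185% cutoff works out to:

$$
\begin{aligned}
L_{185}(n) &= 1.85 \times \bigl(12{,}060 + 4{,}180\,(n-1)\bigr)\\
L_{185}(4) &= 1.85 \times 24{,}600 = 45{,}510\\
L_{185}(5) &= 1.85 \times 28{,}780 = 53{,}243\\
L_{185}(6) &= 1.85 \times 32{,}960 = 60{,}976
\end{aligned}
$$

Under these assumptions, $60,000 clears the cutoff for households of five or fewer but falls just short for a six-person household, which is why the comparison holds for most, not all, households.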

Households classified as having very low food security are much more likely to be on SNAP (aka food stamps). Of course, this isn't causal: being on SNAP likely isn't causing food insecurity; it's more likely the other way around. Two other results are noteworthy. Households classified as having very low food security are much more likely to 1) have children in the household and 2) report farming or ranching as a primary occupation.